A recent article in The Atlantic magazine highlighted how engineering tunnel vision has led to a proliferation of code, tools, systems, and standards, and to failures in their implementation.

Two distinct threads run through this. The first is the need to simplify and structure the many systems, code bases, tools, and standards that are applied interchangeably to solve identical problems, and to achieve greater reliability in doing so.

The second is the need to build user-responsive capability into software that solves real-world problems in industry, health, and science, rather than software that smooths over petty inconveniences, as in “app” development for delivery services or social media.

We are reaching the stage where experimenting with and applying various tools, only to arrive at identical solutions to similar problems, provides little distinct value unless it is tempered by a laser-like focus on converging on user needs.

How many coding systems, applications, ecosystems, and standards do we really need to make things happen?

It took over 2 million years to develop the hammer in its present form. Software evolves far faster, but its acceleration is dispersed and mechanistic in nature, and it does not always reflect comparable progress in usability and function. We may die if avionics fail; we will not go hungry or freeze if we don’t download the next app.

Coding as an intellectual exercise brings with it a narrow, mechanistic focus that offsets any cross-pollination benefits.

While it keeps people in work and a vibrant consulting & training industry in shape, the focus on technology, as opposed to applying thought & rigor to practice, has created a great deal of inertia in advancing the cause of useful code, tools, systems, and usability.

This is evident, for example, in “agile” development practices, which are seen as a panacea for the inertia in applying technology to business problems.

Instead of focusing on drivers and high-level objectives, supported by structured, methodical automation of a core set of capabilities, teams follow an approach that distances requirements from technology.

While the Agile Manifesto certainly encapsulates many of the usability objectives laid out above, in practice the gap between an understanding of value and structure, and the resulting inadequate code, grows in proportion to the effort invested in meeting “pragmatic” deadlines.

The point is that less code is better code, and code shaped by converged design, a comprehensive reason for existing (i.e. requirements), and method in its application is better still.

Fortunately, a number of such abstractions exist, which brings the next point into view.

What constitutes a useful “converged” abstract model? It appears that, as with the development of the hammer, computing has certain ways of shaping the link between mathematics and usability that progress in a direction that works.

One such example is the *NIX family of systems: initially developed in 1970, they remain relevant after more than 40 years and are used in the majority of current communication and computing infrastructure.

Another, standards-focused example is the OSI work, shaped by the ITU (founded in 1865), which led to the OSI/Network Management Forum in 1988. That forum in turn became the TM Forum, whose set of standards is widely used by service providers today as a reference for designing communication systems.

This work shows the need for abstractions when structurally solving a range of human problems in communicating information, problems that involve extensive investment in engineering and software development.

With the coming of the internet, and subsequently the web, software tools proliferated for automating standard communication and adding information-processing functions (HTML, CSS, JavaScript). The IETF, and later the W3C, governed these new standards, independently of the practices inherited from the past.

Building responsive, interactive applications demanded less implementation effort, and less thought was given to generalizations and principles, since these were already embedded in the standards and tools.

The problem arose when the proliferation of standards, tools, and applications led, on the one hand, to a lack of structure and method in coding practice and, on the other, to a multiplying, divergent effect.

It takes more effort to think carefully about a set of problems, and the structure that leads to a successful solution, than to implement a program that provides immediate results. Moreover, when tools proliferate, much of the available energy goes into mastering them rather than into careful design and coding practice.

As developers and engineers face new needs and challenges, they constantly seek new ways of doing things. As competitors, they seek to limit their opposition by gazumping its technology: in short, “cheaper, better, faster”, roughly aligned to cost, quality, and efficiency. For the foreseeable future software will proliferate, so the best possible approach is to govern the process of making software rather than to limit a growing process.

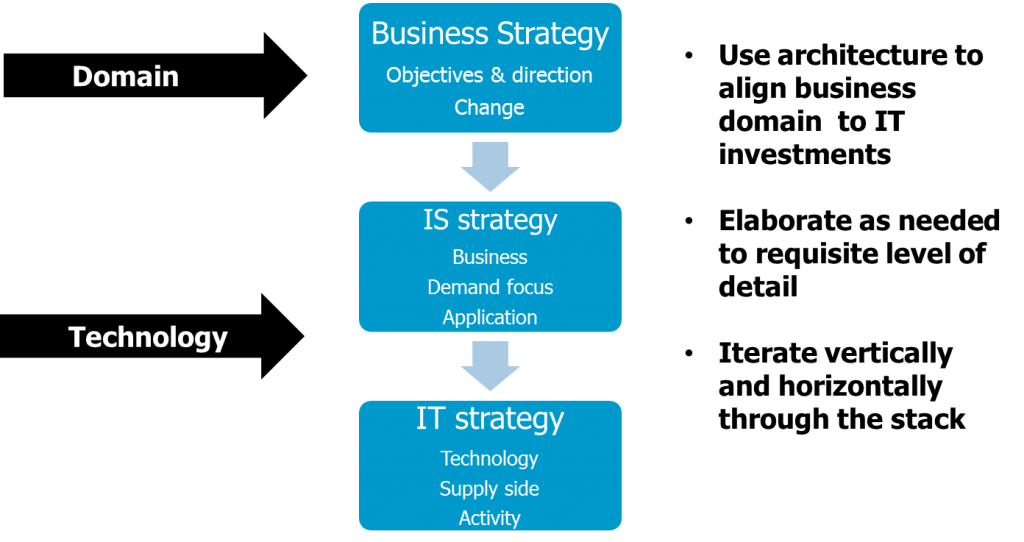

Abstract architecture and governance methods, interactive modelling & visualization languages, and data representation can go a long way toward focusing attention on business and human value before any code is produced, thereby reducing software proliferation and increasing quality while questioning the value of implementation objectives.

Architecture and abstraction methods exist that provide cost-saving, efficient solutions to real-life problems: they set the stage for users and stakeholders to understand and set objectives, and they guide subsequent coding practice.

Although they are in tension with the need to deliver results pragmatically, they guide and shape better, lower-risk software systems that solve real business problems.